This post summarizes the container (Pod) scheduling approaches commonly used in Kubernetes (k8s), as a personal reference.

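Deployment and ReplicationController

Deployment is currently the officially recommended way to run and scale a group of identical Pods. When you create a Deployment, Kubernetes also automatically creates a ReplicaSet that keeps the number of running Pods at the desired count.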

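ReplicationController works much like a Deployment, but it is no longer recommended by the official documentation and is rarely seen in day-to-day development; a Deployment already covers everything it was used for.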

The following command quickly creates a Deployment:

```shell
kubectl create deploy nginx --image=nginx --replicas=3
```

You can then confirm that Kubernetes created a ReplicaSet for it, for example with `kubectl get rs`.
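For reference, here is a minimal hand-written sketch of a manifest roughly equivalent to the `kubectl create deploy` command above. The `app: nginx` label mirrors the label `kubectl create deployment` applies by default; the command's actual generated object may contain additional default fields.

```yaml
# Roughly what `kubectl create deploy nginx --image=nginx --replicas=3` creates
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 3              # desired Pod count, maintained by the generated ReplicaSet
  selector:
    matchLabels:
      app: nginx           # must match the Pod template labels below
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
```

Applying this with `kubectl apply -f` and later editing `replicas` has the same effect as running `kubectl scale`.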

Change the number of Pods:

```shell
kubectl scale deployment nginx --replicas=6
```

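Scheduling onto specific nodes

This is done with nodeSelector, whose value is a set of node labels. Before using it, make sure the target nodes actually carry those labels.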

First, check the labels on each node:

```shell
kubectl get nodes --show-labels
```

If the label is missing, add it with `kubectl label`:

```shell
kubectl label nodes <your-node-name> disktype=ssd
```

Then write the Pod YAML:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    env: test
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
  nodeSelector:
    disktype: ssd
```
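In practice nodeSelector is usually placed in a Deployment's Pod template rather than on a bare Pod. A minimal sketch, assuming the `disktype=ssd` label from above; the Deployment name `nginx-ssd` is hypothetical:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ssd          # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-ssd
  template:
    metadata:
      labels:
        app: nginx-ssd
    spec:
      nodeSelector:
        disktype: ssd      # every replica may only land on nodes labeled disktype=ssd
      containers:
      - name: nginx
        image: nginx
```

If no node carries the label, the Pods remain Pending until one does.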

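Node affinity

Node affinity (nodeAffinity) lets you express scheduling rules or preferences about which nodes a Pod may run on. There are two variants: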

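Note that in both variants, once a Pod has been scheduled according to the rules, later changes to node labels do not cause it to be rescheduled (this is what IgnoredDuringExecution means).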

requiredDuringSchedulingIgnoredDuringExecution: a hard requirement; the Pod is only scheduled onto nodes that satisfy the rule.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: disktype
            operator: In
            values:
            - ssd
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
```
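A single required rule can combine several conditions. Per the Kubernetes documentation, multiple entries under `nodeSelectorTerms` are ORed, while multiple `matchExpressions` inside one term are ANDed. The sketch below assumes hypothetical `disktype` values and a hypothetical `gpu` node label:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-affinity-terms   # hypothetical name
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        # Term 1: disktype=ssd AND the node has a "gpu" label (any value)
        - matchExpressions:
          - key: disktype
            operator: In
            values:
            - ssd
          - key: gpu
            operator: Exists
        # Term 2, ORed with term 1: disktype=nvme (hypothetical value)
        - matchExpressions:
          - key: disktype
            operator: In
            values:
            - nvme
  containers:
  - name: nginx
    image: nginx
```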

preferredDuringSchedulingIgnoredDuringExecution: a soft preference; the scheduler tries to find a matching node but still schedules the Pod elsewhere if none is available. `weight` (1 to 100) sets how much the preference counts when nodes are scored.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  affinity:
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 1
        preference:
          matchExpressions:
          - key: disktype
            operator: In
            values:
            - ssd
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
```
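The two variants can be combined: the hard rule narrows the set of candidate nodes, and the weighted preferences then rank the candidates, with the weights of all matching preferences summed into each node's score. A sketch assuming hypothetical zone names `zone-a` and `zone-b`:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-mixed-affinity   # hypothetical name
spec:
  affinity:
    nodeAffinity:
      # Hard rule: only nodes labeled disktype=ssd are candidates
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: disktype
            operator: In
            values:
            - ssd
      # Soft rules: among the candidates, prefer zone-a strongly and zone-b weakly
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 80
        preference:
          matchExpressions:
          - key: topology.kubernetes.io/zone
            operator: In
            values:
            - zone-a             # hypothetical zone name
      - weight: 20
        preference:
          matchExpressions:
          - key: topology.kubernetes.io/zone
            operator: In
            values:
            - zone-b             # hypothetical zone name
  containers:
  - name: nginx
    image: nginx
```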

Pod affinity

Similar to node affinity, Pod affinity and anti-affinity also come in two types:

requiredDuringSchedulingIgnoredDuringExecution

preferredDuringSchedulingIgnoredDuringExecution

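The topologyKey field declares the topology domain within which the Pod should be placed together with (affinity) or kept apart from (anti-affinity) the Pods matched by the label selector. Common topology domains, expressed as node labels, are listed below; see the documentation for others: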

topology.kubernetes.io/zone: availability zone

kubernetes.io/hostname: a single node (host)

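Affinity example: the Pod may only be scheduled onto a node whose topology.kubernetes.io/zone label has some value V if at least one node in zone V is already running a Pod labeled security=S1. In addition, the anti-affinity rule asks the scheduler to avoid, where possible, zones that already run Pods labeled security=S2.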

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: with-pod-affinity
spec:
  affinity:
    podAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: security
            operator: In
            values:
            - S1
        topologyKey: topology.kubernetes.io/zone
    podAntiAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100
        podAffinityTerm:
          labelSelector:
            matchExpressions:
            - key: security
              operator: In
              values:
              - S2
          topologyKey: topology.kubernetes.io/zone
  containers:
  - name: with-pod-affinity
    image: registry.k8s.io/pause:2.0
```

Anti-affinity example: avoid placing multiple replicas labeled app=store on the same node, so that each node runs at most one cache instance.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis-cache
spec:
  selector:
    matchLabels:
      app: store
  replicas: 3
  template:
    metadata:
      labels:
        app: store
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - store
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: redis-server
        image: redis:3.2-alpine
```
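Affinity and anti-affinity are often combined. The Kubernetes documentation pairs the cache above with a web front end whose replicas avoid each other but each sit next to a cache instance; the following is a sketch along those lines, where the `web-store` label and the image tag are illustrative:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-server
spec:
  selector:
    matchLabels:
      app: web-store
  replicas: 3
  template:
    metadata:
      labels:
        app: web-store
    spec:
      affinity:
        # Spread: no two web-store replicas on the same node
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - web-store
            topologyKey: "kubernetes.io/hostname"
        # Co-locate: only nodes already running an app=store (cache) Pod
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - store
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: web-app
        image: nginx:1.16-alpine
```

On a three-node cluster running both Deployments, each node ends up with one cache Pod and one web Pod.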

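Taints and tolerations (Taints and Tolerations)

A taint marks a node that ordinary Pods should avoid (for example, a problem node): Pods that do not tolerate the taint are not scheduled onto it, and with the NoExecute effect they are also evicted if already running there. A toleration on a Pod does the opposite: it allows, but does not force, the Pod to be scheduled onto a node with a matching taint.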

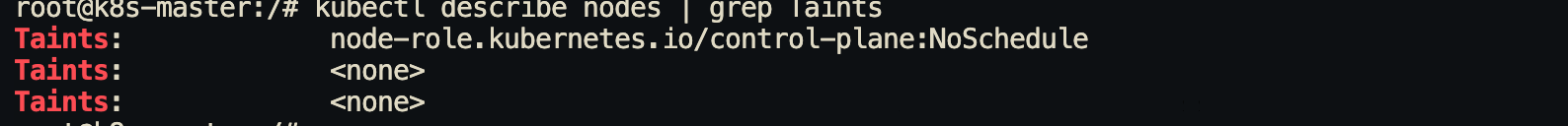

Query the current taints with:

```shell
kubectl describe nodes | grep Taints
```

Add a taint:

```shell
kubectl taint nodes node1 key1=value1:NoSchedule
```

Remove the taint (note the trailing `-`):

```shell
kubectl taint nodes node1 key1=value1:NoSchedule-
```

Add a matching toleration to the Pod spec:

```yaml
tolerations:
- key: "key1"
  operator: "Equal"
  value: "value1"
  effect: "NoSchedule"
```
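The fragment above belongs under the Pod's `spec`. A fuller sketch that also shows the `Exists` operator (matches any value of the key) and `tolerationSeconds`, which applies only to the `NoExecute` effect and bounds how long the Pod may keep running on a node after a matching taint appears; the key `key2` is hypothetical:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-tolerations   # hypothetical name
spec:
  tolerations:
  # Tolerates the taint added above: key1=value1:NoSchedule
  - key: "key1"
    operator: "Equal"
    value: "value1"
    effect: "NoSchedule"
  # Tolerates any value of key2 with effect NoExecute, but only for 3600 s;
  # after that the Pod is evicted from the tainted node
  - key: "key2"
    operator: "Exists"
    effect: "NoExecute"
    tolerationSeconds: 3600
  containers:
  - name: nginx
    image: nginx
```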

Typical use cases:

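Node maintenance: move off the Pods that cannot tolerate the node. This can be done with the cordon or drain commands: `kubectl cordon` only marks the node unschedulable, while `kubectl drain` also evicts the Pods running on it.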

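Dedicated nodes: reserve nodes for particular workloads, which is especially practical for Pods with very heavy resource usage.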
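For dedicated nodes, the taint alone only keeps other Pods out; it does not pull the intended Pods in. A common pattern, also described in the Kubernetes documentation, is to both taint and label the nodes and give the workload a matching toleration plus a nodeSelector (or node affinity). A sketch, assuming nodes prepared with the hypothetical key `dedicated=big-job`, e.g. via `kubectl taint nodes node1 dedicated=big-job:NoSchedule` and `kubectl label nodes node1 dedicated=big-job`:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: big-job            # hypothetical name
spec:
  # Toleration: lets the Pod onto nodes tainted dedicated=big-job:NoSchedule
  tolerations:
  - key: "dedicated"
    operator: "Equal"
    value: "big-job"
    effect: "NoSchedule"
  # nodeSelector: restricts the Pod to the labeled (dedicated) nodes
  nodeSelector:
    dedicated: big-job
  containers:
  - name: worker
    image: busybox:1.28
    command: ["sh", "-c", "echo heavy work; sleep 3600"]
    resources:
      requests:
        cpu: "4"           # illustrative values
        memory: 8Gi
```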

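Preemptive scheduling

Assigning priorities ensures that important services are scheduled first; when resources are short, a higher-priority Pod can preempt (evict) lower-priority Pods.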

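First create a PriorityClass object. The example below is preempting; a PriorityClass can also be non-preempting (preemptionPolicy: Never), in which case its Pods are still placed ahead of lower-priority Pods in the scheduling queue but never evict running Pods to make room.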

```yaml
apiVersion: scheduling.k8s.io/v1
```

```yaml
apiVersion: v1
```
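A complete PriorityClass, its non-preempting variant, and a Pod that uses it might look like the following sketch, modeled on the example in the Kubernetes documentation; the names, the priority value, and the descriptions are assumptions:

```yaml
# Preempting PriorityClass (default preemptionPolicy: PreemptLowerPriority)
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: high-priority                 # hypothetical name
value: 1000000                        # higher value = higher priority
globalDefault: false
description: "For critical services only."
---
# Non-preempting variant: queued ahead of lower priorities, never evicts Pods
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: high-priority-nonpreempting   # hypothetical name
value: 1000000
preemptionPolicy: Never
globalDefault: false
description: "High priority without preemption."
---
# A Pod opts in by naming the PriorityClass
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  priorityClassName: high-priority
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
```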

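DaemonSet

A DaemonSet ensures that every eligible node runs one copy of a Pod; it is commonly used for node-level agents such as log collectors. In the example below, the tolerations also let the Pod run on control-plane nodes, which are normally tainted.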

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-elasticsearch
  namespace: kube-system
  labels:
    k8s-app: fluentd-logging
spec:
  selector:
    matchLabels:
      name: fluentd-elasticsearch
  template:
    metadata:
      labels:
        name: fluentd-elasticsearch
    spec:
      tolerations:
      - key: node-role.kubernetes.io/control-plane
        operator: Exists
        effect: NoSchedule
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      containers:
      - name: fluentd-elasticsearch
        image: quay.io/fluentd_elasticsearch/fluentd:v2.5.2
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
      terminationGracePeriodSeconds: 30
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
```
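To run a DaemonSet on only a subset of nodes, add a nodeSelector (or node affinity) to its Pod template; nodes without the label get no copy. A minimal sketch, assuming a hypothetical node label `logging=enabled` and a hypothetical name `node-agent`:

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-agent             # hypothetical name
spec:
  selector:
    matchLabels:
      name: node-agent
  template:
    metadata:
      labels:
        name: node-agent
    spec:
      # Only nodes labeled logging=enabled get a copy of this Pod
      nodeSelector:
        logging: enabled
      containers:
      - name: agent
        image: busybox:1.28
        command: ["sh", "-c", "while true; do sleep 3600; done"]
```

Labeling another node with `kubectl label nodes <your-node-name> logging=enabled` makes the DaemonSet controller start a Pod there.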

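Job and CronJob

Jobs are another Pod scheduling mechanism common in development, typically used to run one-off scripts (Job) or scheduled scripts (CronJob).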

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: pi
spec:
  template:
    spec:
      containers:
      - name: pi
        image: perl:5.34.0
        command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(2000)"]
      restartPolicy: Never
  backoffLimit: 4
```
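A Job can also run a task several times and in parallel. In the sketch below (hypothetical name and command), `completions` sets how many successful Pod runs are required, `parallelism` how many run at once, `activeDeadlineSeconds` caps the Job's total runtime, and `ttlSecondsAfterFinished` lets Kubernetes delete the finished Job automatically:

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: batch-worker             # hypothetical name
spec:
  completions: 6                 # Job succeeds after 6 successful Pods
  parallelism: 2                 # at most 2 Pods run at the same time
  backoffLimit: 4                # retries before the Job is marked failed
  activeDeadlineSeconds: 600     # fail the Job if it runs longer than 10 minutes
  ttlSecondsAfterFinished: 3600  # delete the finished Job after 1 hour
  template:
    spec:
      containers:
      - name: worker
        image: busybox:1.28
        command: ["sh", "-c", "echo processing; sleep 5"]
      restartPolicy: Never
```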

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: hello
spec:
  schedule: "* * * * *"
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: hello
            image: busybox:1.28
            imagePullPolicy: IfNotPresent
            command:
            - /bin/sh
            - -c
            - date; echo Hello from the Kubernetes cluster
          restartPolicy: OnFailure
```
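The `schedule` field uses standard cron syntax (`* * * * *` means every minute). A sketch of a few commonly tuned CronJob fields, with a hypothetical name and schedule:

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: nightly-report          # hypothetical name
spec:
  schedule: "30 2 * * *"        # 02:30 daily, kube-controller-manager's local time zone
  concurrencyPolicy: Forbid     # skip a run if the previous one is still active
  startingDeadlineSeconds: 300  # give up on a run that could not start within 5 minutes
  successfulJobsHistoryLimit: 3 # keep the last 3 successful Jobs
  failedJobsHistoryLimit: 1     # keep the last failed Job
  jobTemplate:
    spec:
      backoffLimit: 2
      template:
        spec:
          containers:
          - name: report
            image: busybox:1.28
            command: ["sh", "-c", "date; echo generating report"]
          restartPolicy: OnFailure
```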

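Custom scheduling

A custom scheduler has to be developed according to the official guidelines; see the documentation for the detailed steps. A Pod then selects it through the `schedulerName` field.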

```yaml
apiVersion: v1
```
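Once a custom scheduler is running, Pods opt in through `spec.schedulerName`; Pods that omit the field are handled by `default-scheduler`. A sketch assuming a scheduler deployed under the hypothetical name `my-scheduler`:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: custom-scheduled-pod   # hypothetical name
spec:
  # Must equal the name the custom scheduler was configured with;
  # if no scheduler with this name is running, the Pod stays Pending
  schedulerName: my-scheduler
  containers:
  - name: pause
    image: registry.k8s.io/pause:2.0
```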